When you are sitting on the sofa at the end of the day, relaxing and watching TV, maybe eating, and not in the mood to keep making decisions about what to watch, you might not think that you are in a situation where Linked Data and SPARQL queries could be useful. Yet the flexibility of the data that can be obtained from sources supporting these technologies makes them ideal candidates to power a Leanback TV experience. With the right query it is possible to curate a collection of video podcasts that play one after another to keep the TV viewer happy. Viewers still have control and can still go to any podcast in the collection, but they are not faced with a decision every ten minutes about what to watch, which allows them to relax and discover new content.

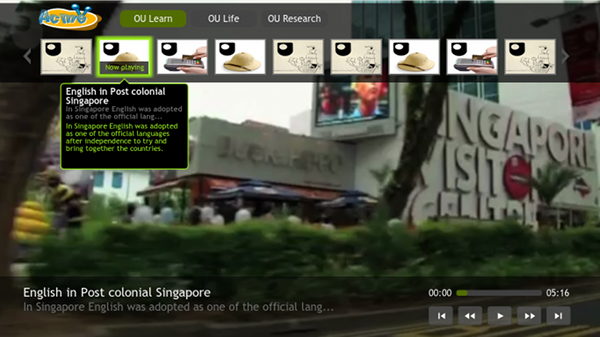

There are a few examples of Leanback video players around. Probably the three best known are YouTube Leanback, Vimeo Couchmode and Redux TV. With these services a selection of videos is picked and put into a list. The videos play one after the other, but usually the viewer can skip videos or return to one they like. They can also pick from "channels" which offer themed lists of videos; YouTube, for example, has channels for Trends and Best of YouTube. In the demo below I have used a separate SPARQL query to populate each channel.

The design of these services follows the principles of the ten-foot user interface: large fonts, controls that can be navigated with a simple remote control, and a user interface that fades out to give way to the main attraction, the video. It is for your TV, designed to be looked at from across a room rather than from directly in front of you. When the user interface is in view the user will typically see a series of thumbnails representing each video in the running order. They can easily navigate to a particular video, but the idea is that they do not have to keep making decisions about what to watch. This is a halfway house between broadcast TV and the traditional experience of watching videos on the internet.

The SPARQL queries for each channel are not just there to return a dataset but to curate an experience, a bit like how a museum curator would put together artefacts to tell a story rather than just saying something like "these are all of the objects we have from the 1800s". As SPARQL gives us quite fine-grained control over the contents of the dataset that is returned (something that is difficult to do using RSS), we can think about the criteria for our video list very carefully.

In the demonstration web application (pictured above) I am using a data source from a university to build some channels of general interest content. I've also kept to short podcasts, filtering out any videos over ten minutes on one channel, so if the viewer isn't very interested in a video they know it won't be long before the next one starts. A university is a perfect example of a content provider that might produce very different types of video: short general interest items as well as videos of complete lectures. If you were aiming to build a channel of general interest items you probably wouldn't want an hour-long lecture about advanced mathematics to be included, but if you were serving a specialised audience this might be exactly what you want. Fortunately all you need to do is change the SPARQL query to provide these very different channels.

The good news is that, thanks to Google, building a demonstration webapp to show off these ideas is nowhere near as difficult as you might think. In my last blog post I mentioned the Resources for Google TV developers website, which has example templates that you can adapt. For this example I adapted Template 2 from the site, which gives us pretty much all of the code that we need to produce a leanback TV service using HTML5. Our main task here is to provide it with the right data. Note that this example only works on WebKit-powered browsers such as Google Chrome. This is for two reasons: the videos that the demo points to are not encoded using a codec that Firefox can support in its HTML5 <video> tag, and the template uses some CSS3 properties which have not been fully standardised yet (and so start with "-webkit", though it may be possible to put "-moz" at the start of these properties to make them work in Firefox).

To get started, download the code for Google TV HTML Template 2. For our demo we need to put this code on a server that supports PHP, so copy the code to an area that your webserver can reach. Before we start adapting the template to support SPARQL we need to do a couple of supporting tasks. In the css folder open the styles.css file and add this property to .slider-photo: "-webkit-background-size: 105px 73px;". We need to do this because the thumbnails for the videos referenced in the data from the Open University (OU) SPARQL endpoint are too large for the template and need to be scaled down.

A slight complication in this demo is that we will be using AJAX to retrieve the results of the SPARQL query, but most browsers enforce a same-origin policy on such requests. Hosting this service on one domain and trying to access the OU SPARQL endpoint on another would not be allowed, as they have different web addresses. So here I have written an example script in PHP that sits on the same domain as this demo but proxies the request to the remote domain. A better way to implement this in the future might be to use the CORS specification. An example proxying script is shown below; you'll need to adapt this to work on your server.

<?php

// Localhost proxy for AJAX clients

function request($url){

// is curl installed?

if (!function_exists('curl_init')){

die('CURL is not installed!');

}

// get curl handle

$ch= curl_init();

// set request url

curl_setopt($ch, CURLOPT_URL, $url);

// return response, don't print/echo

curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

$response = curl_exec($ch);

curl_close($ch);

return $response;

}

if ($_SERVER['REMOTE_ADDR'] == '127.0.0.1') {

header("Content-Type: text/xml");

$sparql = $_GET['query'];

$sparql_url = sprintf("http://data.open.ac.uk/query?query=%s", urlencode($sparql));

echo request($sparql_url);

}

?>
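On the browser side, the query has to be URL-encoded before it is appended to the proxy address. Here is a minimal sketch of that step. The helper name buildProxyUrl is hypothetical and not part of the template, and the proxy path is simply the one assumed for this demo.

```javascript
// Hypothetical helper (not part of the Google TV template): builds the URL
// for the local proxy script, passing the SPARQL query as a query parameter.
function buildProxyUrl(proxyUrl, sparql) {
  // encodeURIComponent escapes characters such as ?, {, }, " and +, which
  // would otherwise be misread as part of the URL itself.
  return proxyUrl + "?query=" + encodeURIComponent(sparql);
}

// Example call: the proxy path below is an assumption, adjust it for your server.
var exampleUrl = buildProxyUrl(
  "http://localhost/ouleanback/sparqlproxy.php",
  "SELECT ?title WHERE { ?podcast ?p ?title } LIMIT 5"
);
console.log(exampleUrl);
```

The PHP script then reads this value from $_GET['query'] and encodes it again when it builds the request to the remote endpoint.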

When you download the template you are given an example playlist, but we need to replace this with our own list of videos. To do this we need to make substantial changes to the file dataprovider.js, which is found in the "js" directory. This builds up a JavaScript data structure that is passed to the template to provide the list of videos. We need to populate this structure from the results of a SPARQL query. The complete code for the new dataprovider.js is shown below, followed by an explanation of how it works.

/**

* @fileoverview Classes for DataProvider

*/

var gtv = gtv || {

jq: {}

};

/**

* DataProvider class. Defines a provider for all data (Categories, Images & Videos) shown in the template.

*/

gtv.jq.DataProvider = function() {

};

/**

* Returns all data shown in the template.

* @return {object} with the following structure:

* - categories -> [category1, category2, ..., categoryN].

* - category -> {name, videos}.

* - videos -> {thumb, title, subtitle, description, sources}

* - sources -> [source1, source2, ..., sourceN]

* - source -> string with the url | {src, type, codecs}

*/

gtv.jq.DataProvider.prototype.getData = function() {

function getRandom(max) {

return Math.floor(Math.random() * max);

}

function randomiseOrder(invideos) {

var outvideos = [];

while (invideos.length > 0) {

// getRandom(max) returns 0..max-1, so pass the full length to allow every item to be picked

var index = getRandom(invideos.length);

var video = invideos.splice( index , 1)[0];

outvideos.push( video );

}

return outvideos;

}

function getSPARQLmedia(sparql) {

var videos = [];

var query_url = "http://localhost/ouleanback/sparqlproxy.php?query=" + encodeURIComponent(sparql);

// Make the request to the data server

var xhr = new XMLHttpRequest();

// TODO: Make this asynchronous

xhr.open("GET", query_url, false);

xhr.send();

// results

var xmlDoc = xhr.responseXML;

// parse the response

var results = xmlDoc.getElementsByTagName("result");

// loop round each results line

for (var i = 0; i < results.length; i++) {

var video = {

thumb: '',

title: '',

subtitle: '',

description: [],

sources: []

};

var bindings=results[i].getElementsByTagName("binding");

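for (var j = 0; j < bindings.length; j++) {

// the name attribute of each binding is the SPARQL variable it holds

var key = bindings[j].getAttribute("name");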

var value = '';

if (bindings[j].getElementsByTagName("uri").length > 0) {

value = bindings[j].getElementsByTagName("uri")[0].childNodes[0].nodeValue;

}

if (bindings[j].getElementsByTagName("literal").length > 0 && bindings[j].getElementsByTagName("literal")[0].hasChildNodes()) {

value = bindings[j].getElementsByTagName("literal")[0].childNodes[0].nodeValue;

}

// assign values to object

if (key == 'source') {

video.sources.push(value);

}

else if (key == 'title') {

video.title = value;

}

else if (key == 'desc') {

if ( value.length > 60 ) {

video.subtitle = value.substr(0,60) + "...";

video.description.push(value);

}

else {

video.subtitle = value;

}

}

else if (key == 'thumb') {

video.thumb = value;

}

}

videos.push(video);

}

return videos;

}

var data = {

categories: []

};

// get OU Learn channel

var sparql = "PREFIX dc:

"PREFIX wdmedia:

"PREFIX rdfns:

"PREFIX skos:

"SELECT ?thumb ?desc ?title ?source " +

"WHERE { " +

" ?podcast

" ?podcast dc:title ?title . " +

" ?podcast wdmedia:description ?desc . " +

" ?podcast

" ?podcast wdmedia:createDate ?createDate . " +

" ?podcast dc:subject ?subject . " +

" ?podcast rdfns:type

" ?subject skos:inScheme

" ?podcast wdmedia:duration ?duration . " +

" FILTER ( ?duration < \"00:10:00\" ) . " +

"} " +

"ORDER BY DESC(?createDate) " +

"LIMIT 25 ";

var videos = getSPARQLmedia(sparql);

var category = {

name: "OU Learn",

videos: randomiseOrder(videos)

};

data.categories.push(category);

// get OU Life channel

var sparql = "PREFIX dc:

"PREFIX wdmedia:

"PREFIX rdfns:

"SELECT ?thumb ?desc ?title ?source " +

"WHERE { " +

" ?podcast

" ?podcast dc:title ?title . " +

" ?podcast wdmedia:description ?desc . " +

" ?podcast

" ?podcast wdmedia:createDate ?createDate . " +

" ?podcast dc:subject

" ?podcast rdfns:type

"} " +

"ORDER BY DESC(?createDate) " +

"LIMIT 25 ";

videos = getSPARQLmedia(sparql);

category = {

name: "OU Life",

videos: randomiseOrder(videos)

};

data.categories.push(category);

// get OU Research channel

var sparql = "PREFIX dc:

"PREFIX wdmedia:

"PREFIX rdfns:

"SELECT ?thumb ?desc ?title ?source " +

"WHERE { " +

" ?podcast

" ?podcast dc:title ?title . " +

" ?podcast wdmedia:description ?desc . " +

" ?podcast

" ?podcast wdmedia:createDate ?createDate . " +

" ?podcast dc:subject

" ?podcast rdfns:type

"} " +

"ORDER BY DESC(?createDate) " +

"LIMIT 25 ";

videos = getSPARQLmedia(sparql);

category = {

name: "OU Research",

videos: randomiseOrder(videos)

};

data.categories.push(category);

return data;

};

This might look a bit scary, so let's break it down a little. Scanning through the code you will notice that there are three SPARQL queries that populate the three channels: OU Learn, OU Life and OU Research. Each one populates a category object which gets pushed into the data that is returned to the template (this is a "channel" and has its own button at the top of the screen). The queries look for the newest twenty-five podcasts in each channel. Note that in the SPARQL query for the OU Learn channel the duration of the podcast is obtained and then used in a filter. Unfortunately this is supplied as a string property rather than a numeric property, but a string comparison is good enough to make the filter work, provided every duration uses the same zero-padded HH:MM:SS form. You should always test your queries with different values though, to make sure that they behave as you expect in these circumstances.
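To see why the string comparison on durations works, and where it could go wrong, here is a small JavaScript sketch that mimics the comparison. The function names are my own for illustration, and the second duration format ("9:59") is a made-up example of data that is not zero-padded, not something taken from the OU data.

```javascript
// Plain string comparison, roughly what a FILTER on string literals does.
function isShorterByString(duration, limit) {
  return duration < limit;
}

// Safer alternative: convert "HH:MM:SS" (or "MM:SS") to a number of seconds.
function durationToSeconds(duration) {
  return duration.split(":").reduce(function (total, part) {
    return total * 60 + parseInt(part, 10);
  }, 0);
}

// Zero-padded strings sort in the same order as the times they represent.
console.log(isShorterByString("00:09:59", "00:10:00")); // true

// Without padding the comparison breaks: "9" sorts after "0".
console.log(isShorterByString("9:59", "00:10:00")); // false

// Comparing seconds gives the expected answer in both cases.
console.log(durationToSeconds("9:59") < durationToSeconds("00:10:00")); // true
```

If the data ever mixed formats, converting to seconds on the client after fetching would be one way around it.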

Each query is then passed to the getSPARQLmedia() function, which calls the proxy script, which in turn calls the OU SPARQL endpoint. The XML results are parsed and the details of each video are added to the dataset returned. The JavaScript code used to parse the results here is very similar to the code I used with the Samsung Internet@TV platform in an earlier blog post. You will see that I have left a little "TODO" note in that function. There are many opportunities to improve how this script works: showing something like a "Loading..." notification while the script processes the data in the background would be one of them, as would some error handling, but I will leave that as a programming challenge for others :-)
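As a starting point for that challenge, here is one possible sketch of an asynchronous request with basic error handling. The function name getSPARQLmediaAsync and its callback parameters are hypothetical, and parseResults is assumed to be a function that turns the SPARQL XML document into an array of video objects, for example the parsing loop from the listing.

```javascript
// Hypothetical asynchronous variant of getSPARQLmedia().
// parseResults: assumed function that maps the XML document to video objects.
// onSuccess / onError: callbacks supplied by the caller.
function getSPARQLmediaAsync(queryUrl, parseResults, onSuccess, onError) {
  var xhr = new XMLHttpRequest();
  // The third argument (true) makes the request asynchronous.
  xhr.open("GET", queryUrl, true);
  xhr.onload = function () {
    if (xhr.status === 200 && xhr.responseXML) {
      onSuccess(parseResults(xhr.responseXML));
    } else {
      onError(new Error("SPARQL request failed with status " + xhr.status));
    }
  };
  xhr.onerror = function () {
    onError(new Error("Network error while contacting the SPARQL proxy"));
  };
  xhr.send();
}
```

A "Loading..." message could be shown before calling this function and hidden in either callback. Note that getData() as written returns its data immediately, so the code that calls it would also need adapting to wait for the callbacks; that part is not shown here.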

The list of videos returned from the SPARQL query is then mixed up using the randomiseOrder() function, so someone who doesn't spend long using the webapp but returns frequently still has a good chance of seeing something new. The data is then passed to the rest of the template and rendered, the first video starts automatically, and the viewer is free to discover what is on offer. Note that to use the webapp you can just use the arrow keys and the Enter key to simulate a remote control.
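Another common way to mix up a list is the Fisher-Yates shuffle, which swaps items in a single pass instead of repeatedly splicing them out. The sketch below is an alternative to randomiseOrder(), not the code used in the demo; the name shuffleVideos and the optional random parameter are my own additions, the latter only there to make the function easy to test.

```javascript
// Fisher-Yates shuffle: returns a new array and leaves the input untouched.
// random is an optional function returning a number in [0, 1); it defaults
// to Math.random and exists only so that tests can supply fixed values.
function shuffleVideos(videos, random) {
  random = random || Math.random;
  var shuffled = videos.slice();
  for (var i = shuffled.length - 1; i > 0; i--) {
    // Pick a position j with 0 <= j <= i and swap it with position i.
    var j = Math.floor(random() * (i + 1));
    var tmp = shuffled[i];
    shuffled[i] = shuffled[j];
    shuffled[j] = tmp;
  }
  return shuffled;
}

var example = shuffleVideos(["a", "b", "c", "d"]);
console.log(example.length); // 4
```

Because every position is chosen from all the items that remain, each ordering of the list is equally likely.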

As the idea of a Leanback website is new, being able to take an existing template and populate it with data is very powerful. By combining an existing template with an existing, very flexible source of data we can create a whole new way for people to discover the content on offer, and discoverability can be a bit of an issue with web content. As the template is already built there is no need to justify large amounts of time building such a service; an experimental webapp can be built quite quickly.

If you are using Google Chrome or Google TV you can see this webapp running at http://labs.greenhughes.com/ldleanback. Comments and questions are very welcome.

Photo: Tifosi by Sem Vandekerckhove